AI/ML Model Training Simplified

We were taught in university why we need a CPU; now, let me walk you through why we need a GPU.

1. What is AI/ML Training (Learning)?

Definition: Training (or learning) is the process of teaching a machine learning model using a dataset.

Goal: The model learns patterns and relationships in data.

Steps in Training:

1. Input data is fed into the model.

2. The model makes predictions.

3. The predictions are compared with actual values (labels).

4. The error (difference) is calculated.

5. The model updates its parameters using optimization techniques like gradient descent.

Steps 1–5 are repeated many times (each full pass over the dataset is called an epoch) until the error stops improving or a set number of passes is reached.
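The training steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming a toy model y = w * x + b, a mean squared error loss, and a made-up dataset generated from y = 2x + 1. Real models have millions of parameters, but the loop has the same shape: predict, measure the error, nudge the parameters, repeat.

```python
# Minimal training loop: fit y = w * x + b with gradient descent.
# Assumption: a toy, noise-free dataset generated from y = 2x + 1.

def train(xs, ys, learning_rate=0.05, epochs=2000):
    w, b = 0.0, 0.0  # model parameters, initialised to zero
    n = len(xs)
    loss = 0.0
    for _ in range(epochs):  # repeat the steps many times (one epoch per pass)
        # Feed the inputs into the model and make predictions
        preds = [w * x + b for x in xs]
        # Compare predictions with the labels and compute the mean squared error
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n
        # Gradient of the loss with respect to w and b, then a gradient descent update
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, loss

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b, loss = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss={loss:.6f}")
```

The learning rate controls how big each update is: too large and the parameters overshoot, too small and training needs many more passes.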

Why GPUs?

Training requires performing millions, and for large models billions, of mathematical operations (matrix multiplications, tensor computations, etc.).

GPUs have thousands of cores that can process these operations in parallel, making training much faster than on CPUs.
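To see why parallel hardware helps, here is a naive matrix multiplication in plain Python (a sketch for illustration, not how frameworks actually implement it). Each output element is an independent dot product, so multiplying two 1000×1000 matrices means a million dot products and a billion multiply-adds that a GPU can spread across its cores instead of working through them one at a time.

```python
# Naive matrix multiplication, written to show why it parallelises well:
# every output element C[i][j] is an independent dot product.

def matmul(a, b):
    rows, inner, cols = len(a), len(b), len(b[0])
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    c = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # This dot product reads only row i of A and column j of B,
            # so all rows * cols of them could run at the same time.
            c[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return c

def multiply_adds(rows, inner, cols):
    # One multiply-add for every (i, j, k) combination.
    return rows * inner * cols

print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(multiply_adds(1000, 1000, 1000))            # 1000000000
```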

2. What is Inference?

Definition: Inference is when a trained model is used to make predictions on new, unseen data.

Goal: Use the trained model to get useful outputs.

Steps in Inference:

New input data is given to the trained model.

The model processes the input and makes a prediction.

The output is used for decision-making.
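Inference reuses the learned parameters without changing them. The sketch below assumes a hypothetical two-feature logistic model whose `WEIGHTS` and `BIAS` came from an earlier training run; the values are made up for illustration. It runs only the forward pass and then applies a decision threshold.

```python
import math

# Hypothetical parameters, assumed to come from an earlier training run.
WEIGHTS = [0.8, -0.4]
BIAS = -0.1

def predict_proba(features):
    # Forward pass only: no labels, no error calculation, no parameter updates.
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid squashes z into (0, 1)

def decide(features, threshold=0.5):
    # Turn the model's output into a decision.
    return "positive" if predict_proba(features) >= threshold else "negative"

new_input = [1.5, 0.5]  # new, unseen input
print(decide(new_input))
```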

Why GPUs?

Inference is typically less computationally intensive than training but still benefits from parallel processing.

Some applications require real-time inference (e.g., self-driving cars, speech recognition), where GPUs help achieve fast results.

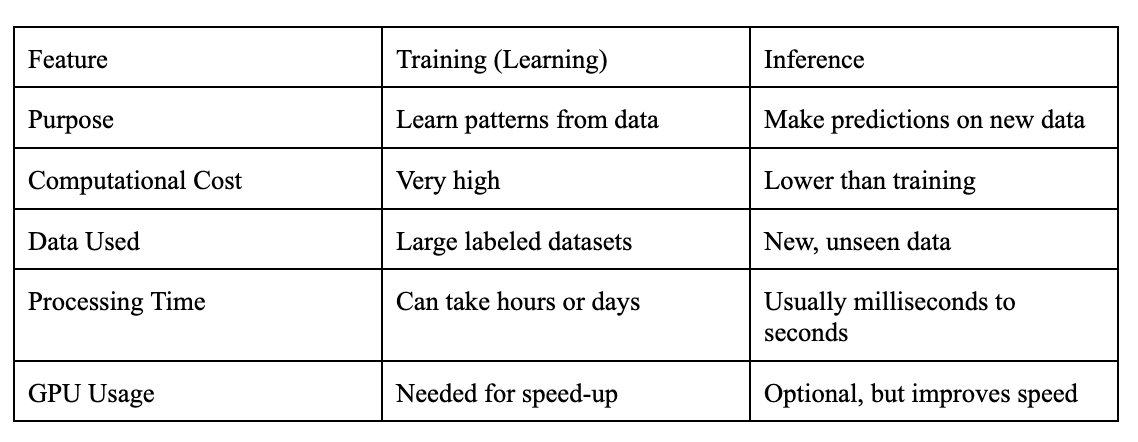

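3. Key Differences Between Training and Inference

Purpose: Training teaches the model patterns from data; inference uses the trained model to produce useful outputs.

Data: Training uses a labeled dataset; inference uses new, unseen data.

Parameters: Training updates the model's parameters over many repetitions; inference leaves them fixed.

Compute: Training is computationally expensive; inference is typically less intensive but may need real-time speed.

Hardware: GPUs are recommended for training; inference can run on CPUs for small-scale tasks, with GPUs speeding up real-time applications.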

4. Example: Training vs. Inference in an AI Model

Imagine you're building an AI that recognizes cats and dogs in images.

Training Phase:

You feed thousands of labeled images of cats and dogs into a model.

The model learns features like fur texture, ear shape, and size.

It adjusts its internal parameters to improve accuracy.

Inference Phase:

You give the trained model a new image of a pet.

The model analyzes the image and predicts: "This is a cat."

The prediction is made based on what it learned during training.
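The cat-and-dog example can be made concrete with a deliberately simple technique, nearest-centroid classification (swapped in here for illustration; real image classifiers are neural networks working on pixels). It assumes each image has already been reduced to two hypothetical features, ear pointiness and body size, with made-up values: training averages the features per label, and inference picks the label with the closest average.

```python
# Toy cat-vs-dog classifier using nearest-centroid classification.
# Assumption: each image is already reduced to two made-up features,
# (ear_pointiness, body_size), both scaled to 0..1.

def train(examples):
    """Training: average the feature vectors of each label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Inference: pick the label whose centroid is closest (squared distance)."""
    def distance(centroid):
        return sum((c - f) ** 2 for c, f in zip(centroid, features))
    return min(centroids, key=lambda label: distance(centroids[label]))

labeled = [  # hypothetical labeled training data
    ([0.9, 0.2], "cat"), ([0.8, 0.3], "cat"),
    ([0.3, 0.7], "dog"), ([0.2, 0.9], "dog"),
]
model = train(labeled)
print(predict(model, [0.85, 0.25]))  # a new, unseen pet
```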

5. When Do We Use GPUs?

Training: Recommended for all but the smallest models, because training is computationally expensive.

Inference: Can run on CPUs for small-scale tasks, but GPUs help speed up real-time applications.
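In practice, frameworks let the same code run on either device. A minimal sketch, assuming PyTorch is the framework in use (the post does not name one): it checks for a CUDA GPU with `torch.cuda.is_available()` and falls back to the CPU otherwise, including when PyTorch is not installed.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch  # assumption: PyTorch is the framework in use
    except ImportError:
        return "cpu"  # PyTorch not installed: fall back to the CPU
    return "cuda" if torch.cuda.is_available() else "cpu"

print(f"Running on: {pick_device()}")
```

The returned string can be passed to `torch.device(...)`, so training code picks up a GPU when one is present while small inference jobs still run on a plain CPU machine.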

Wrapping Up

GPUs play a crucial role in both AI/ML training and inference:

Training is where the model learns, requiring massive computational power.

Inference is when the trained model makes predictions, benefiting from GPU acceleration for speed.

Both stages power real-world AI applications, from chatbots to self-driving cars!

I hope this serves as a good starting point for you to dive deeper. Keep in mind that it’s not rocket science.

Until next time,

Adlet
